Stream overview

I currently work on AI control and I'm excited about e.g. prototyping and evaluating mitigations for specific threat models, doing science that sheds light on important considerations related to AI control, or building evals for control-related capabilities.

It's hard for me to give specific project descriptions so far in advance, but here's a selection of projects I've been excited about in the past (some of which are now papers!). You can expect the actual projects to be of similar flavour but not exactly the same:

- study the advantages and disadvantages of chain-of-thought vs action monitoring

- study a version of untrusted monitoring where the monitor is an honesty-tuned version of the agent

- develop a setting for studying the insider data poisoning threat model, then study attacks and defences in the setting

- develop consistency as a sabotage detection methodology and evaluate it on model organisms

- do a control evaluation of research sabotage

- study debate between two untrusted models

- develop a scheming thoughts dataset for calibrating chain-of-thought monitors

- develop evaluations for scheming precursor capabilities (these could be used by labs for pre-control safety cases, and for advocating for control)

Mentors

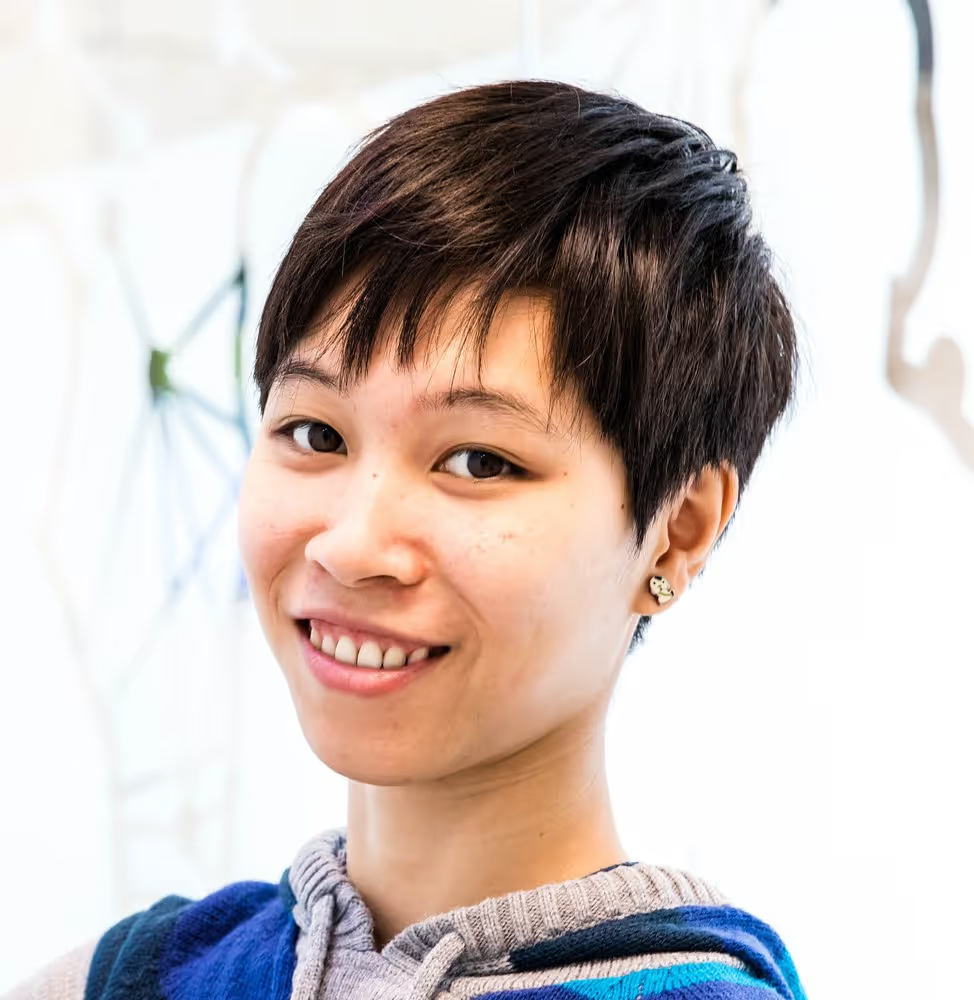

Mary is a research scientist on the Frontier Safety Loss of Control team at DeepMind, where she works on AGI control (security and monitoring). Her role involves helping make sure that potentially misaligned, internally deployed models cannot cause severe harm or sabotage, even if they wanted to. Previously, she worked on dangerous capability evaluations for scheming precursor capabilities (stealth and situational awareness) as well as catastrophic misuse capabilities.

Mentorship style

I'm pretty hands-off. I expect scholars to fully take charge of the project, and update / consult me as needed. I do want my scholars to succeed, and am happy to advise on project direction, experiment design, interpreting results, decision-making / breaking ties, or getting unstuck.

During the program, we'll meet once a week to go through any updates / results, and your plans for the next week. I'm also happy to comment on docs, respond on Slack, or have additional ad hoc meetings when useful.

Representative papers

https://arxiv.org/abs/2511.06626

https://arxiv.org/abs/2505.23575

https://arxiv.org/abs/2505.01420

https://arxiv.org/abs/2412.12480

https://static1.squarespace.com/static/660eea75305d9a0e1148118a/t/68fb7d6f70f3d60ca3aabd88/1761312111659/2025LASRdata_poisoning.pdf

Scholars we are looking for

- Collaborative ML/SW engineering: You can write high-quality code independently and quickly (esp. open-source LLM experiments, e.g. training, manipulating internals, scaffolding / building agents, Inspect). You can debug code. You can work with others, e.g. you can navigate a codebase written not entirely by you and build on it productively.

- Familiarity with capability evaluations, model organisms, and especially AI control (e.g. you know about trusted / untrusted monitoring, why CoT monitoring is a good idea, incrimination). This makes it much easier for us to communicate and be on the same page about the goals of the project, and is important for making day-to-day project decisions.

- Conceptual research ability: You can come up with ideas for interesting experiments that align with the project plan. You prioritise well. (E.g. you've published a paper where you were the primary person making experiment design decisions.)

- Good communication, team player: You can clearly communicate your research ideas, experimental methodology, results, etc., verbally or in writing (writing is more important). You can notice and express confusion, uncertainty, disagreement, or dissatisfaction, and are willing to work through conflicts. You impartially consider other people’s ideas, disagree respectfully, can admit when you’re wrong, and are willing to commit to the team’s direction.

I prefer scholars to work in pairs or groups of three within the stream, but I'm happy to take on external collaborators as long as they are committed full-time to the project.

Project selection

I'll propose ~5 projects for scholars to red-team and flesh out before deciding on one to own. I'm also open to scholar-proposed projects if they sound promising; I'd just be less useful as an advisor.