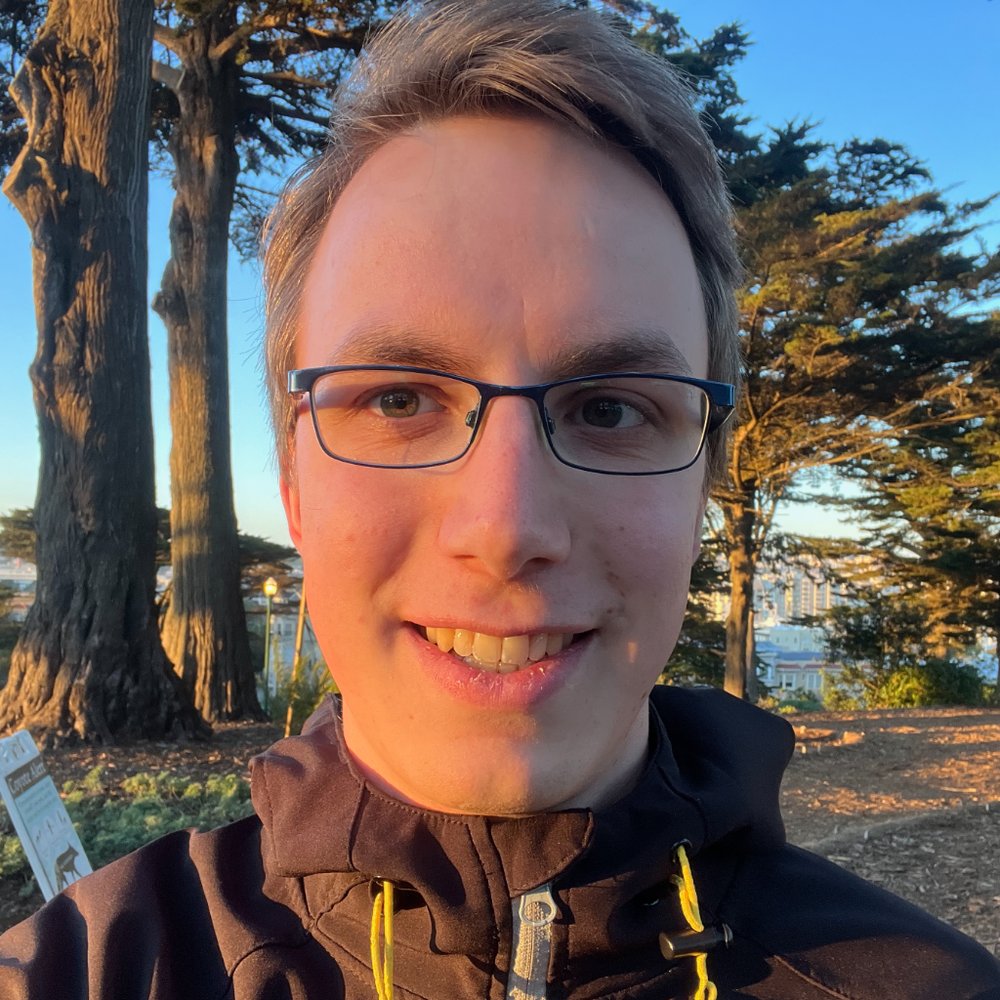

Erik Jenner

Google DeepMind


Research Scientist

Erik is a Research Scientist on the AGI Safety & Alignment team at Google DeepMind, which he joined in February 2025. The team is the main group at Google DeepMind working on technical approaches to existential risk from AI systems. He worked on [a paper on chain-of-thought monitoring](https://arxiv.org/abs/2507.05246) and, more broadly, focuses on AI control.

Previously, Erik was a PhD student at the Center for Human-Compatible AI (CHAI) at UC Berkeley, advised by Stuart Russell, where he worked on stress-testing white-box monitors, among other projects.

He has worked with over a dozen mentees through MATS, CHAI internships, and other programs.